deciBelé

Joshua Tree, California

33.8734° N, 115.9010° W

AI Vision: 002

Programs: Rhino, Grasshopper, Google Gemini API, NanoBanana Pro

Typology: Cultural

Conceptualization

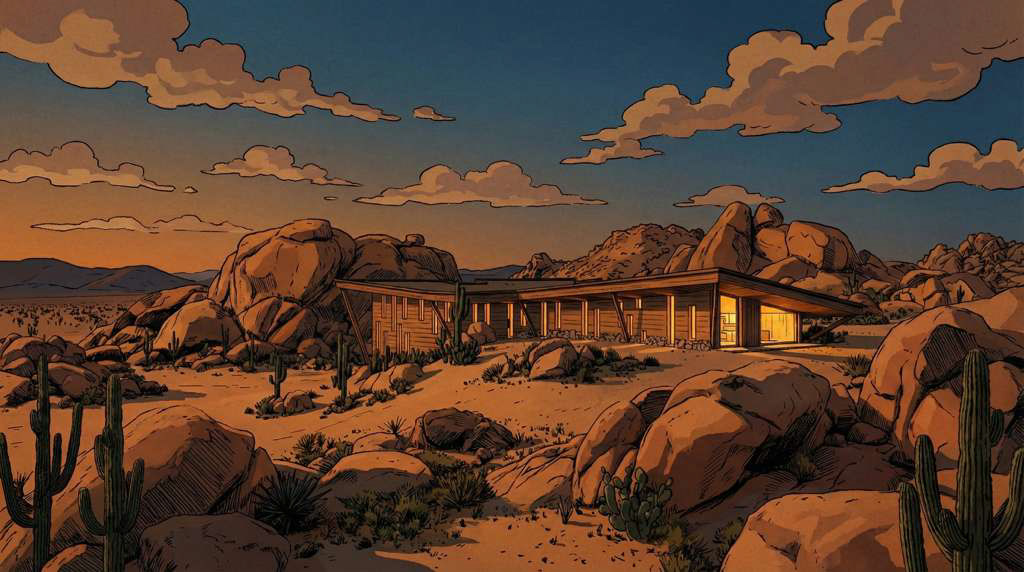

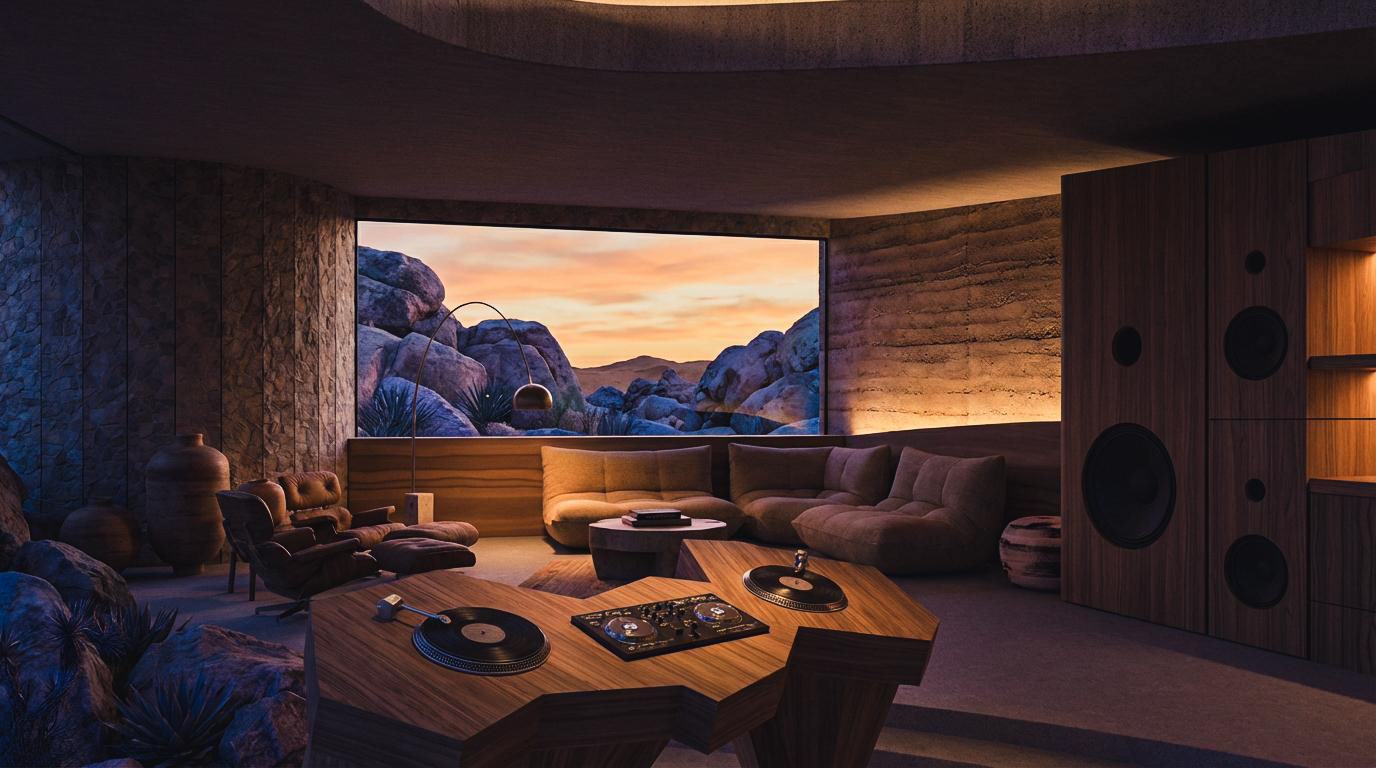

Embedded among boulders and desert flora, the structure opens itself selectively to the landscape while remaining acoustically grounded. A hovering roof plane and thick earthen walls frame a carved central void, where warm interior light contrasts with the cool desert dusk, emphasizing enclosure, calm, and intentionality.

The project was developed through iterative, AI-integrated design and rendering, in which live geometry drove real-time visual feedback instead of post-processed imagery. This workflow let spatial, material, and atmospheric decisions evolve simultaneously, positioning iteration itself as a design driver rather than a final validation step.

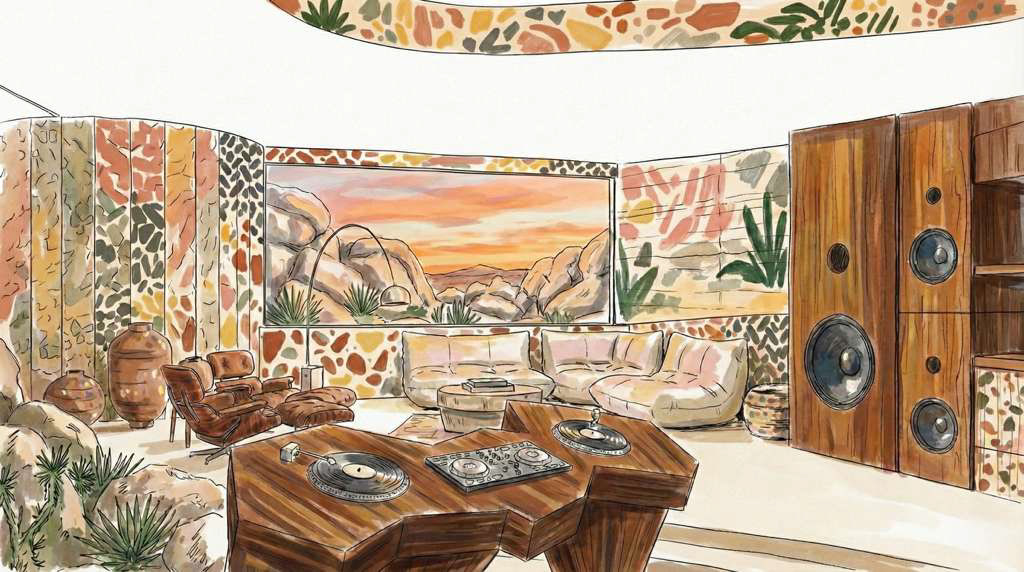

deciBelé is conceived as a high-desert recording studio and listening retreat, where architecture functions as an instrument tuned by mass, silence, and light. Embedded into the terrain, the project prioritizes slowness, isolation, and sensory focus, creating a controlled environment for deep listening, recording, and creative recalibration within the vast quiet of Joshua Tree.

Integrated GenAI Process

For deciBelé, AI-generated imagery is not produced through detached or sequential prompting but through a live, bidirectional link between generative image models and active Rhino geometry. Visual output is driven directly by the viewport, which captures massing, proportion, and spatial intent in real time, so prompts act as contextual modifiers rather than speculative instructions. This integration keeps every image architecturally grounded, evolving in lockstep with the geometry it represents.

Rather than generating isolated visions, the system enables continuous iteration in which form, material, and atmosphere co-develop. References, tonal cues, and narrative intent guide the AI’s interpretation of the live model, allowing it to extrapolate experiential qualities without departing from the model’s spatial logic. In this workflow, AI shifts from post-processing tool to design collaborator, turning Rhino into an adaptive visualization environment where architecture and image evolve as a single process.

Live Geometry, Live Intelligence

For deciBelé, generative AI was integrated natively into Rhino through Grasshopper-driven calls to Gemini (NanoBanana Pro), creating a live feedback loop between active geometry and image generation. Viewport captures were triggered directly from Grasshopper, paired with structured prompts, and sent to the model in real time—allowing AI to interpret massing, proportion, and spatial composition directly from the Rhino scene rather than from detached reference imagery.
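The pairing of a viewport capture with a structured prompt can be sketched in Python. This is a minimal illustration under stated assumptions, not the project's actual Grasshopper definition: it assumes Python 3 (for example, a Python script component in Rhino 8), represents the viewport capture as raw PNG bytes because the capture step itself depends on RhinoCommon, and uses the hypothetical helper names `build_structured_prompt` and `build_request_body`. The body layout follows the publicly documented Gemini REST `generateContent` shape (a `contents` list of `parts` holding `text` and `inline_data`); the endpoint, model ID, and authentication are deliberately left out and should be checked against the current API documentation.

```python
import base64
import json


def build_structured_prompt(site, intent, tone, constraints):
    """Compose a labeled prompt for one viewport capture (hypothetical field layout)."""
    lines = [
        "Render the attached Rhino viewport capture as an architectural image.",
        "Keep massing, proportion, and camera exactly as captured.",
        f"Site: {site}",
        f"Narrative intent: {intent}",
        f"Tone: {tone}",
    ]
    # Each constraint becomes its own labeled line so the model reads it as a rule.
    lines += [f"Constraint: {c}" for c in constraints]
    return "\n".join(lines)


def build_request_body(png_bytes, prompt):
    """Pair one capture with its prompt in a Gemini-style generateContent body."""
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": "image/png",
                            # JSON cannot carry raw bytes, so the image is base64-encoded.
                            "data": base64.b64encode(png_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real viewport capture.
    capture = b"\x89PNG\r\n\x1a\n placeholder"
    prompt = build_structured_prompt(
        site="Joshua Tree high desert at dusk",
        intent="recording studio embedded among boulders",
        tone="calm, enclosed, warm interior light against cool dusk",
        constraints=["do not add floors or openings"],
    )
    print(json.dumps(build_request_body(capture, prompt), indent=2))
```

Keeping the geometry-preservation instruction at the top of every prompt is one way to make the text act as a modifier on the captured scene rather than a free description.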

Average generation times remained under 10 seconds, making rapid iteration viable at the pace of a design charrette. While fully live viewport-to-AI updates are feasible within this framework, they were intentionally left out of this iteration of the system to minimize operational cost and token-processing overhead. Instead, updates were triggered discretely, preserving designer control over when visual feedback occurred while maintaining a fluid, near-real-time workflow.
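Discrete triggering can be expressed as a small gate that allows a request only when the designer explicitly fires it and the inputs have actually changed. The sketch below is an assumption about how such a gate might work, not a description of the project's implementation: `DiscreteTrigger` is a hypothetical name, and the `triggered` flag stands in for something like a Grasshopper Button component. Inside Grasshopper, the gate's state would also need to persist between solutions, for example in the script component's sticky storage.

```python
import hashlib


class DiscreteTrigger:
    """Hypothetical gate: allow an AI request only on an explicit trigger,
    and only when the capture or prompt differs from the last one sent."""

    def __init__(self):
        self._last_key = None
        self.calls_sent = 0

    def should_send(self, triggered, capture_bytes, prompt):
        if not triggered:
            return False  # no designer action, no request
        # Hash capture and prompt separately so the boundary between them stays unambiguous.
        key = (
            hashlib.sha256(capture_bytes).hexdigest(),
            hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        )
        if key == self._last_key:
            return False  # identical request; skip it to save cost and tokens
        self._last_key = key
        self.calls_sent += 1
        return True


if __name__ == "__main__":
    gate = DiscreteTrigger()
    print(gate.should_send(True, b"capture-1", "earthen palette"))  # True
    print(gate.should_send(True, b"capture-1", "earthen palette"))  # False
    print(gate.should_send(True, b"capture-2", "earthen palette"))  # True
```

Hashing the capture alongside the prompt means a repeated press on an unchanged scene costs nothing, which keeps token spend roughly proportional to actual design changes.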

This approach reframed AI from a post-processing step into an embedded design instrument. Variations in materiality, light behavior, enclosure, and atmosphere could be evaluated side-by-side against a consistent geometric base, allowing architectural intent to converge quickly. By grounding generative speculation in live geometry and controlled iteration, the charrette process prioritized clarity, continuity, and spatial rigor over spectacle.

Prompt-Based Material Intelligence

This workflow replaces traditional material assignment with live, prompt-driven control tied to color-coded geometry groups inside Rhino. Volumes are organized and tagged visually through layer-based color control, then interpreted directly by the AI through language—allowing material, finish, and surface quality to be defined in seconds without opening a material editor or dealing with UV mapping. Geometry becomes a readable legend, and prompting becomes the fastest way to test design intent.
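A color-coded layer legend can be turned into a material clause appended to each prompt. In the minimal sketch below, the layer names, RGB values, and material descriptions are hypothetical placeholders that loosely echo the earthen walls, hovering roof, and warm interior light described earlier, and `material_clause` is an illustrative helper rather than part of any Rhino or Gemini API.

```python
# Hypothetical legend: Rhino layer name -> (display color, material description).
# Colors and materials are illustrative placeholders, not the project's values.
LEGEND = {
    "WALLS": ((180, 110, 70), "thick rammed-earth walls with coarse horizontal striation"),
    "ROOF": ((60, 60, 60), "thin hovering roof plane in dark weathered metal"),
    "INTERIOR": ((240, 200, 120), "warm interior light glowing from the carved central void"),
}


def material_clause(legend):
    """Turn a color-coded layer legend into prompt text the image model can read."""
    lines = ["Interpret the viewport colors as material tags:"]
    for layer, ((r, g, b), material) in legend.items():
        # Reject malformed colors early so the prompt never cites an impossible RGB value.
        if not all(0 <= c <= 255 for c in (r, g, b)):
            raise ValueError(f"layer {layer!r} has an out-of-range color {(r, g, b)}")
        lines.append(f"- surfaces colored RGB({r}, {g}, {b}) ({layer}): {material}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(material_clause(LEGEND))
```

Because the clause is plain text, changing a material means editing one string, with no material editor or UV mapping involved.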

Applying materials in Rhino is optional, not mandatory. When used, it sharpens precision and reinforces intent, but the core shift is that materiality becomes conversational and reversible, not locked in early. Designers can cycle through palettes, textures, and finishes at the speed of thought—collapsing modeling, visualization, and evaluation into a single, fluid loop.
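Cycling palettes against a fixed legend can be sketched as generating one prompt variant per palette while the layer colors, and therefore the captured geometry, stay constant. The palette names and materials below are hypothetical, and `palette_prompts` is an illustrative helper; rejecting palettes that miss a layer is one possible design choice that keeps every variant covering the full legend.

```python
# Hypothetical layer colors; these stay fixed so every variant reads the same geometry.
LAYER_COLORS = {
    "WALLS": (180, 110, 70),
    "ROOF": (60, 60, 60),
    "GLAZING": (80, 160, 220),
}

# Hypothetical palettes: each maps every layer to a material description.
PALETTES = {
    "earthen": {
        "WALLS": "rammed earth",
        "ROOF": "weathered steel",
        "GLAZING": "low-iron glass",
    },
    "mineral": {
        "WALLS": "board-formed concrete",
        "ROOF": "dark standing-seam metal",
        "GLAZING": "bronze-tinted glass",
    },
}


def palette_prompts(layer_colors, palettes):
    """Return one material prompt per palette, all keyed to the same color legend."""
    prompts = {}
    for name, palette in palettes.items():
        missing = sorted(set(layer_colors) - set(palette))
        if missing:
            raise ValueError(f"palette {name!r} has no material for {missing}")
        lines = [f"Material palette: {name}"]
        for layer, (r, g, b) in layer_colors.items():
            lines.append(f"- RGB({r}, {g}, {b}) [{layer}]: {palette[layer]}")
        prompts[name] = "\n".join(lines)
    return prompts


if __name__ == "__main__":
    for name, prompt in palette_prompts(LAYER_COLORS, PALETTES).items():
        print(prompt, end="\n\n")
```

Each variant can then be paired with the same viewport capture, so differences between the resulting images come from the palette alone.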